The experiment’s participants were politically minded, sure of their ideologies. Which is why, upon learning that they had just expressed support for an issue they actually oppose, many of them tried to insist they must have misread the question. More than a few were flat-out confused. And, perhaps surprisingly, a handful were relieved to find that they were more ideologically flexible than they realized.

“They told us, ‘Thank God I’m not a left-winger,’” says Philip Parnamets, a psychologist who helped design the crafty experiment that would trick its subjects into defending a political view they disagreed with. “They were like, I didn’t know I could think this way.” Which made Parnamets realize something: “You could see this as a tool for self-discovery. It seemed to open up the possibility of change.”

The premise of Parnamets’ experiment — that a simple psychological game could meaningfully alter a person’s political positions — is something most people probably assume couldn’t work on them. Most of us see our ideological viewpoints as the result of thoughtful, objective consideration. “We live in a world where people think political attitudes are sacred things,” says Parnamets, “that they shouldn’t be changeable at all.”

But a growing body of research suggests that’s not true, and that our politics may be far more flexible than we think. “The idea that one arrives at their political beliefs through careful and considered reasoning only is fictional,” says David Melnikoff, a postdoctoral fellow at Northeastern University who studies attitude change. “Whether it’s about a country, party, policy or politicians, attitudes can be radically changed on the basis of your current stimuli.”

Parnamets’ experiment did just that. He and his colleagues gave their subjects an iPad that contained a series of polar opposite ideological statements. The subjects used their fingers to draw X’s on the spectrum between the two statements to indicate their level of support or opposition to each.

What they didn’t know was that the iPads were programmed to secretly move some of their hand-drawn X’s to different parts of the spectrum. Suddenly, an X drawn next to the statement “I support raising gas taxes” was now closer to “I oppose raising gas taxes.” The researchers then showed the subjects their iPads to see how they would react. Some of them cried foul. But more than half accepted the altered opinions as their own.

Even more remarkably, when asked to explain their thinking behind these opinions, many of the subjects took pains to describe in detail why they had supported a political stance that they hadn’t actually chosen. It was these participants whose political opinions shifted the most dramatically — in fact, their “new” opinions held fast even a week later when the researchers checked in on them again.

“We see a larger attitude change when participants are asked to give a narrative explanation of their choice because they’re then more invested in that view,” says Parnamets. Psychologists call this “choice blindness” — when people have to rationalize a choice they didn’t actually make, their preference can naturally shift toward that choice.

Melnikoff has conducted similar experiments into attitude change, in which participants are primed through exercises to generate positive feelings toward things they don’t actually like. In one such experiment, Melnikoff’s subjects exhibited lower feelings of disgust toward, of all monsters, Adolf Hitler after being told they would have to defend him in court. “All it takes to change someone’s affective response to something is to induce them to have a positively or negatively valenced action toward that person or thing,” says Melnikoff. Even if, intellectually speaking, the subject knows this person or thing is bad, they can still “feel good” about it, like a dieter salivating at the sight of an ice cream sundae they know they shouldn’t eat.

Crushed by negative news?

Sign up for the Reasons to be Cheerful newsletter.

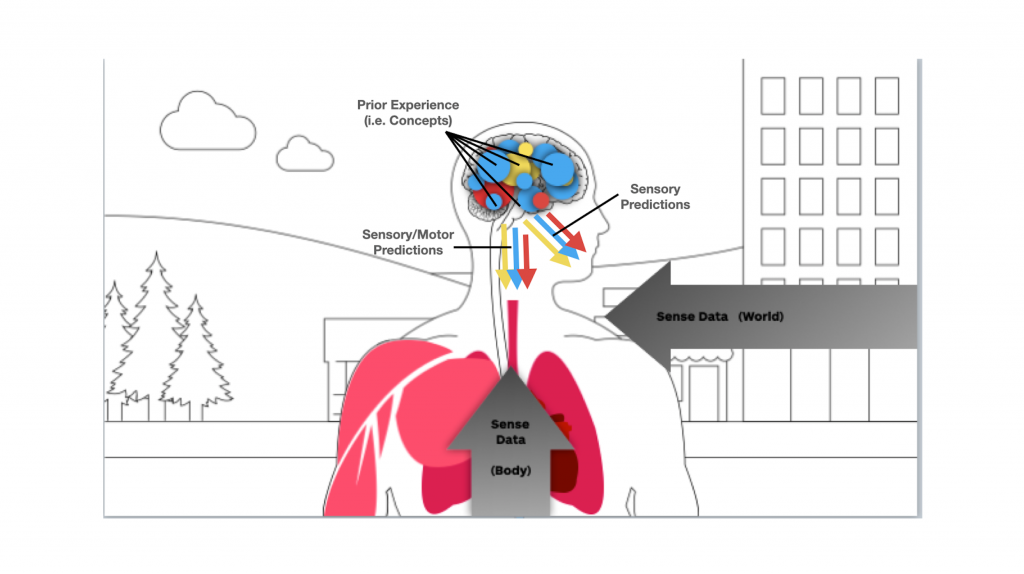

Part of the reason our choices and beliefs can be so easily changed is that our brains have evolved to help us navigate life by avoiding friction and complications. “The brain’s job is to predict, to guide you through an environment without making too many errors, and to help you adapt to that environment,” says Jordan Theriault, a researcher who studies the neural and biological bases of behavior and judgment. “The behaviors people take on and the beliefs they hold are about managing stress and arousal and discomfort.”

Looked at from that perspective, it makes sense that our beliefs should adapt to fit our environments, coalescing into ideologies that make the world feel easier to navigate and understand. You can see this most clearly in partisan politics, wherein an ideology’s potential to bind you together with “your group” may be more important than the ideology itself.

“Part of partisanship is about being part of partisan conflict,” says Theriault. “You have your people and you have the other people you consider yourself against, and that’s an environment where it makes sense to have these beliefs. But if you’re removed from that conflict position, your partisan beliefs may not serve as much of a purpose anymore.”

Removing ourselves from that conflict position is easier said than done in a world where it feels like every politician, pundit and loudmouth on Twitter wants us to do the opposite. But in those rare instances where we can manage to put conflict aside, it’s possible to free ourselves from our rigid political mindsets and see the other’s point of view.

One technique that has gained interest in political advocacy is “deep canvassing.” Traditional political canvassing involves identifying your supporters and making sure they get out and vote — basically, it seeks to leverage partisan feelings to the party’s advantage. Deep canvassing, on the other hand, does the opposite: Canvassers go door to door, but instead of pumping up the passions of their supporters, they listen closely to those who hold opposing views. “What we’ve learned by having real, in-depth conversations with people is that a broad swath of voters are actually open to changing their mind,” Dave Fleischer, one of the technique’s best-known practitioners, told the New York Times Magazine in 2016.

Deep canvassing can leverage the same tribalist power of partisan politics, but turn that power toward finding common ground rather than fighting to the death, according to Theriault. “Just by showing up on someone’s doorstep to talk to them about what they believe, you’re essentially building a new relationship” — a tribe of two — “even though it’s a very short one at the door,” he says. And in an age when so many political affiliations are cultivated online, the face-to-face offering of an olive branch becomes all the more powerful. “It’s difficult to be genuinely listened to on social media,” says Theriault, “so I think being genuinely listened to is a way of building a connection to people — and even working out what you believe, too.”

In essence, deep canvassing functions not unlike Parnamets’ experiment with the iPads. Both encourage their participants to slow down, rethink their initial position, and then engage in a meaningful narrative about the opposing point of view.

Such narratives have powerful effects on our brains — we are more easily swayed by them than we realize. In his book Behave: The Biology of Humans at Our Best and Worst, neuroscientist Robert Sapolsky writes that simply naming a game the “Wall Street Game” is likely to make players compete more ruthlessly than if they are told the game is called the “Community Game.” Telling doctors a drug has a “95 percent survival rate” makes them more likely to prescribe it than if they’re told it has a “five percent death rate.” Subtle cues can alter even our most cherished beliefs. In one experiment conducted in the U.S., survey respondents were more likely to support egalitarian principles if there was an American flag hanging nearby.

“I think we have an untapped reservoir for flexibility in our attitudes and beliefs,” says Parnamets, “but it’s difficult to access because there are many reasons for holding tightly to beliefs — sense of security, sense of belonging, self esteem — and those might actually close you off to other views, even if you’re the type of person who could actually hold a different belief than the one you’re holding.”

“But if you can have a discussion with yourself, which our method allows you to do,” adds Parnamets, “we see a real possibility of change.”